Today’s Cloud provides resources, access and data that make it indispensable. But virtually none of the services that evolved into today’s Cloud existed before computer timesharing came into being and became available to the end-user computing population. Computer timesharing? Did this involve enduring forceful sales presentations while holding a complimentary Mai Tai and ending with a lifetime obligation to pay for a week a year at a condo in Hawaii? Well, in a word: no!

This example (in two sections) may give you an idea of the kind of real industry requirements in the 1970s that made a computer timesharing service a great alternative to the corporate computing services the typical large company offered its own organizations. It also shows how those requirements caused the fundamental pieces of today’s Cloud to be developed and enhanced.

For this first segment I’ll give you an example of the scenarios that drove the computer timesharing industry to great success, by recalling my own involvement in some very specific real-life 1970s computing challenges that needed a solution. Here is an overview of the business problem I faced:

- Gather in-house sales data to generate an accurate report to an industry association by a monthly deadline.

- Analyze the returned reports to make business decisions, which was the real payoff of the reporting.

- Provide an interactive environment for fast and regular completion of both tasks: gathering accurate data and analyzing it.

- Reduce the time cycle for sending and receiving reports so that subsequent analysis was as timely as possible.

My own experience with computer timesharing started with a classic case of a corporate departmental need to get some specific computing done on a recurring basis. In late 1971 Fairchild Semiconductor was a technology, sales and marketing company that offered by that time a huge number of product families and device variations. The industry was very competitive so prices and market share were under constant scrutiny. Fairchild had been an early leader of the industry but was in danger of losing its competitiveness. Many critical sales and marketing strategies were affected by what these numbers indicated.

Fairchild’s Market Research and Planning group was located in Mountain View, California. They did research of their own to suggest trends and strategies, but they were often asked to respond to questions from executive-level senior management plus others in sales and marketing. Quick answers to some of those questions were very important but, in many cases, not easy to produce.

The biggest source of meaningful data was from a semiconductor industry association that virtually all the major semiconductor manufacturers belonged to. On a monthly basis each member company reported unit and dollar sales for each device type they produced. The device types were defined by the association so that reporting could be uniformly accomplished no matter what the naming and numbering nomenclature used by each member company.

So the task each month for Fairchild was to first produce a report for the association of standard device type, units and dollars from an in-house report that covered each Fairchild proprietary device number. The first big time-consuming and error-prone task was a lot of crunching of Fairchild device categories, resulting in a paper report on a form supplied by the association. These reports were sent to a representative of the association who held each company’s individual data in secrecy. The reports were consolidated into one big report that aggregated all the association members’ sales by generic device type. There was no identification of any individual company’s sales numbers, just the group’s totals. As a member you received back a copy of this report.
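To make that monthly roll-up concrete, here is a minimal sketch in Python of the crunching the clerks did by hand: translating proprietary device numbers into association device types and totaling units and dollars for each. The part numbers, device type names and sales figures are all made up for illustration; they are not Fairchild’s or the association’s actual categories or data.

```python
from collections import defaultdict

# Hypothetical crosswalk: in-house device number -> association device type.
# Every entry here is invented for illustration.
DEVICE_TYPE_BY_PART = {
    "FX-1001": "TTL gates",
    "FX-1002": "TTL gates",
    "FX-2001": "MSI counters",
}


def build_association_report(sales_lines):
    """Sum units and dollars per association device type.

    sales_lines: iterable of (part_number, units, dollars) tuples.
    Returns (totals, unmapped), where totals maps each device type to
    [units, dollars] and unmapped lists part numbers that have no
    crosswalk entry and therefore need a human decision.
    """
    totals = defaultdict(lambda: [0, 0.0])
    unmapped = []
    for part, units, dollars in sales_lines:
        device_type = DEVICE_TYPE_BY_PART.get(part)
        if device_type is None:
            unmapped.append(part)
            continue
        totals[device_type][0] += units
        totals[device_type][1] += dollars
    return dict(totals), unmapped


# Made-up lines standing in for the in-house monthly sales report.
sales = [
    ("FX-1001", 1000, 500.0),
    ("FX-1002", 500, 300.0),
    ("FX-2001", 200, 400.0),
    ("FX-9999", 10, 25.0),  # deliberately missing from the crosswalk
]
report, unmapped = build_association_report(sales)
```

Flagging unmapped part numbers rather than silently dropping them mirrors the error-prone part of the manual job: a device left out of the wrong category skews every share calculation made later.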

Then analysis and in-house reporting could begin. Since you knew what you reported by generic device type and you now had last month’s industry figures by generic device type, you could make all kinds of calculations that showed how Fairchild was doing relative to the competition. Standard monthly reports were produced around market share and price/unit trends, but there were also a lot of reports requested on an ad hoc basis depending on the business climate at the time. Both of these processes were almost completely manual, utilizing a group of clerks who used the in-house reports to construct the industry report and submit it. Then, after sitting on their hands for a few days waiting for the return of the consolidated report, they began work to generate in-house market share and other reports as needed. Except for the monthly Fairchild sales report (stacks and stacks of fan-fold line printer output), which was produced by Fairchild’s mainframes and Management Information Systems (MIS) department, the rest of the processes were manual and at best helped by electric calculators! That is the way it was for virtually every device manufacturer.
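The arithmetic behind those standard reports is simple, which is part of why doing it by hand every month was so frustrating. As a rough sketch in Python, with invented numbers for a single generic device type, market share and price per unit could be worked out like this. Because the consolidated report included the company’s own submission, subtracting what you reported leaves the combined figures for everyone else. The function names and figures are my own illustration, not the actual reports we produced.

```python
def ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is zero."""
    if denominator == 0:
        return None
    return numerator / denominator


# Invented monthly figures for one generic device type.
company = {"units": 1500, "dollars": 800.0}    # what the company reported
industry = {"units": 6000, "dollars": 4000.0}  # consolidated association totals

# Market share by units and by dollars.
unit_share = ratio(company["units"], industry["units"])
dollar_share = ratio(company["dollars"], industry["dollars"])

# Average price per unit for the company and for the industry as a whole.
company_price = ratio(company["dollars"], company["units"])
industry_price = ratio(industry["dollars"], industry["units"])

# The industry totals include the company itself, so removing the company's
# own numbers gives the combined figures for all competitors.
competitor_price = ratio(
    industry["dollars"] - company["dollars"],
    industry["units"] - company["units"],
)

print(f"unit share {unit_share:.1%}, dollar share {dollar_share:.1%}")
print(f"price/unit: company {company_price:.3f}, competitors {competitor_price:.3f}")
```

In this made-up case the dollar share is lower than the unit share, which means the company is selling below the average industry price. That is exactly the kind of signal that fed pricing and strategy questions.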

My involvement began one day when I was at my very first job, fresh out of Georgia Tech and working for Fairchild Semiconductor (and Mike Markkula). The head of Market Research and Planning (MR&P) came into Digital Product Marketing, where I worked in Medium Scale Digital Devices as a product marketer. He was looking for someone to help complete a computer automation task that was suspended in midstream. MR&P had already decided that the manual system described above had to be changed drastically. They had found MIS could not do the interactive parts of the requirements at all, and the application would only be addressed sometime in the future when the backlog of other MIS projects allowed. In those times that was normal. New computer applications often waited months before MIS could assess them for requirements and design, and implementation took even longer. Plus, ongoing support for operations and the inevitable changes and enhancements posed an even bigger problem.

To be fair, most companies’ MIS groups were running as hard as they could to stay up with requirements for core company needs: manufacturing scheduling and reporting, sales reporting, payroll (although most came to rely on third-party vendors for that) and other basic business needs. To get them to focus on a departmental need required some juice. And even then the line of projects in front of you could be long. They had small staffs for operations and even smaller ones for evaluating and building new applications. Support for those applications was also sparse. The computers of the time were mainframes that required a special environment, virtually all of it contained in a glass room with raised floors. Power and air conditioning had to be special. Access was, as a matter of necessity, limited. Remote access was essentially unheard of. Programs were scheduled like buses or airline flights and run as ‘batch’ jobs at mostly designated times. Applications of lesser importance were at the mercy of the more important applications deemed ‘critical’. So you can see why there was an opportunity for a better answer and why people embraced it.

That was the reality of the choices available to Fairchild’s MR&P in deciding how to meet company and department requirements. Next installment I will tell you what was actually done and how it was accomplished with computer timesharing versus in-house MIS.

This real life example business problem had a number of requirements for the solution to be completed:

1 Access to computing power on a recurring basis for data entry, reporting and ad hoc analysis

2 On demand computing without regard to schedules for availability

3 Interactive computing suitable for non-IT professionals to program and operate

4 Support and training as required for use by non-IT professionals

5 Access from several locations and device types

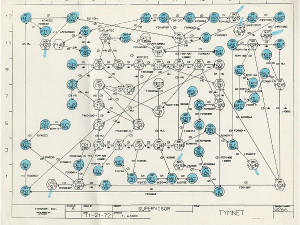

6 A connection from the device to the computer that was reliable and could recover if operational glitches occurred

7 The ability to change or add to the program capabilities as dynamic business demands might require

Hi Mike: I enjoyed reading your first post regarding the business environment that was in place when computer timesharing arrived on the scene. I look forward to your future posts. One thing that I remember from those days is the way that people who knew how to use timesharing could startle their managers and peers by how fast they could provide a solution to an informational requirement. In my personal experience, on day one the reaction was disbelief, and a recipient of the information would say “Yikes, how did you do that?” On day ten the reaction was “This is fabulous, we can increase our efficiency by light years with Tymsharing.” On day thirty the reaction was “That analysis was a big hit and really made us look good.” On day sixty the reaction was “Management is really excited about this, and they are putting in budget for more Tymsharing.” On day 100 a typical reaction late in the afternoon was “Here is a five-page sketch of what I need, do you think you can get it to me by 8:00 am tomorrow?”

Hey Bill, your comments bring back memories I had forgotten from the Fairchild project. The stages of appreciation went almost exactly as you say. It finally got to the stage where every day there were numerous and almost overwhelming new requests for more analysis and additional reports differing considerably from the results we got as our initial goal.

If I had been smart enough to realize the value of producing a semi-generalized report generator I would have jumped on an attempt to build one. We did not even think of the first iota of the concept! But I did realize there were many possible directions for this resource to provide answers at Fairchild.

Instead of inventing a primitive report generator I went into shock, realizing I was becoming an indentured servant producing new Super Basic code daily to meet the never-ending requests for different looks at our company market share, price trends, etc. And that is a good example of the kind of pent-up demand for ad hoc and self-service computing that existed then and drove the computer timesharing industry to high levels of success and performance.

My first experience with Tymshare was also in the early 70’s. I started at AMD in 1971, and the company had no computers. Orders were kept in file folders in a big rack. There was an order entry system though, and it ran entirely on Tymshare.

We had TI terminals that had built in acoustic couplers. The hard-copy orders would be typed into the system so we could get a report of backlog, and shipments were also entered. The whole thing was written in Basic, although later we got to use an IBM report generator that ran on the system and was really good.

So the thinking was definitely that computers were for producing reports and not a critical part of the manufacturing/sales process. The order entry system was bought from someone as a package, like you’d buy a rotary file cabinet. No real concept of modifications and quality control. But it got us through those first few years.

Anyway, over time, the backlog had accumulated a lot of junk in it. So I took it upon myself to start going through record by record, find inconsistencies, and fix the errors. Got pretty good at tweaking the code and at running the IBM database reporting tool. I still remember in my first exposure to SQL wondering why it wasn’t as easy as that IBM system we had in the 70’s.

Later on, I bought AMD’s first computer, a PDP 11/40, with a KSR teletype and optical paper tape reader. That was a whole new experience.